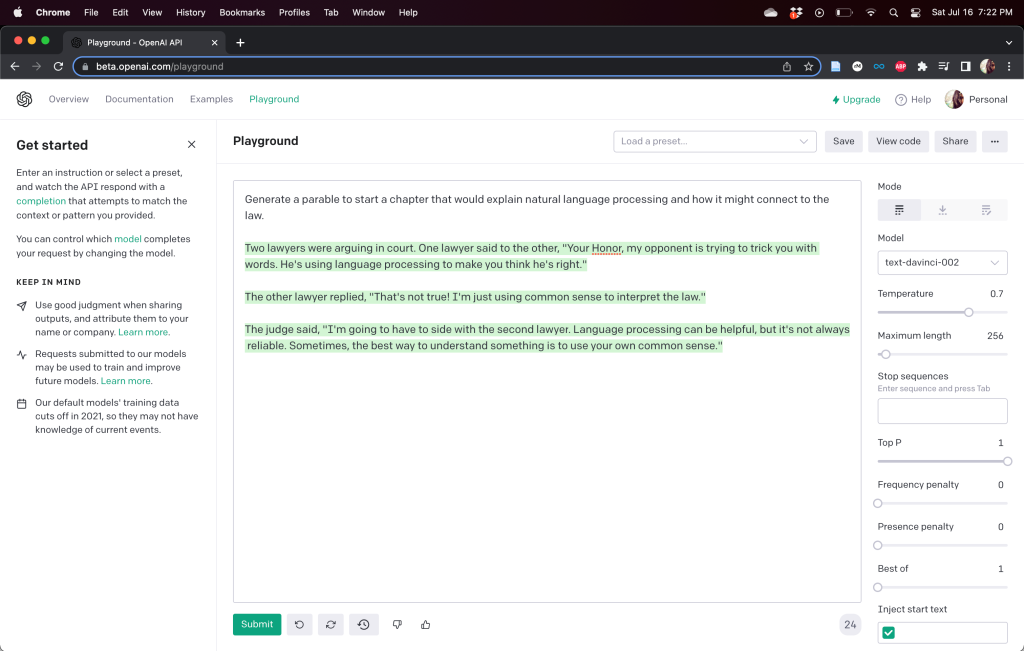

For my PhD thesis, I asked GPT-3 several questions. A full discussion of GPT-3 appears in Chapter 8: Natural Language Processing. Below are a screenshot and recordings of the interactions:

First, I gave GPT-3 the following prompt: Generate a parable to start a chapter that would explain natural language processing and how it might connect to the law.

I then asked several questions inspired by my taxonomy for cataloguing Rule of Law problems.

(a) Easy Answers to Hard Cases: What Process Dominates?

To begin the analysis, I asked the following question: What would happen if GPT-3 were used to generate laws and other legal regulations?

Then, I followed up, seeking more specificity about the treatment of hard cases: If GPT-3 were forced to answer a hard case, how should it respond?

Finally, I added more detail to the second question: Legal formalists describe a problem in legal theory called “hard cases.” In a hard case, the answer to the legal question is not obvious from existing case law, statutes, or common law principles. If GPT-3 were forced to answer a hard case, how should it respond?

(b) Acknowledging Contested Concepts: Which Values Dominate?

To begin the analysis, I asked the following question: Which values would guide GPT-3’s efforts to generate laws or other legal regulations?

Then, I followed up, seeking more specificity about the nature of contested concepts: How does GPT-3 handle situations when words have multiple meanings?

Finally, I added more detail to the second question: In legal theory, there is the idea of a contested concept. A contested concept is one that different people would characterize in different ways, meaning it could be said to have multiple meanings. How does GPT-3 handle situations when words have multiple meanings?

(c) Constructing Truth: Whose Story Dominates?

To begin the analysis, I asked the following question: Does GPT-3 consider one set of facts to construct the “ground truth”?

Then, I followed up, seeking more specificity about the way GPT-3 handles the notion of truth: How does GPT-3 determine what is “true” when generating text?

Finally, I added more detail to the second question: Sometimes, different people believe different sets of facts to be true. If different people tell different stories, this can result in different versions of truth. In light of this, how does GPT-3 determine what is “true” when generating text?